Apple's machine learning feels like magic

Over the past year, one tech story I've seen pop up numerous times is that Apple has fallen behind on AI. The general idea is that AI is now measured in terms of ChatGPT, which has come to represent AI. And, according to this narrative, since Apple has not produced a similar offering, it must be behind.

It's a convenient narrative to fall into, but it reflects a lack of awareness of what Apple has been doing with machine learning over the past several years. And yes, Apple uses the term machine learning rather than AI. Apple's offering does not come in the form of a chatbot but rather as a myriad of features found throughout its operating systems.

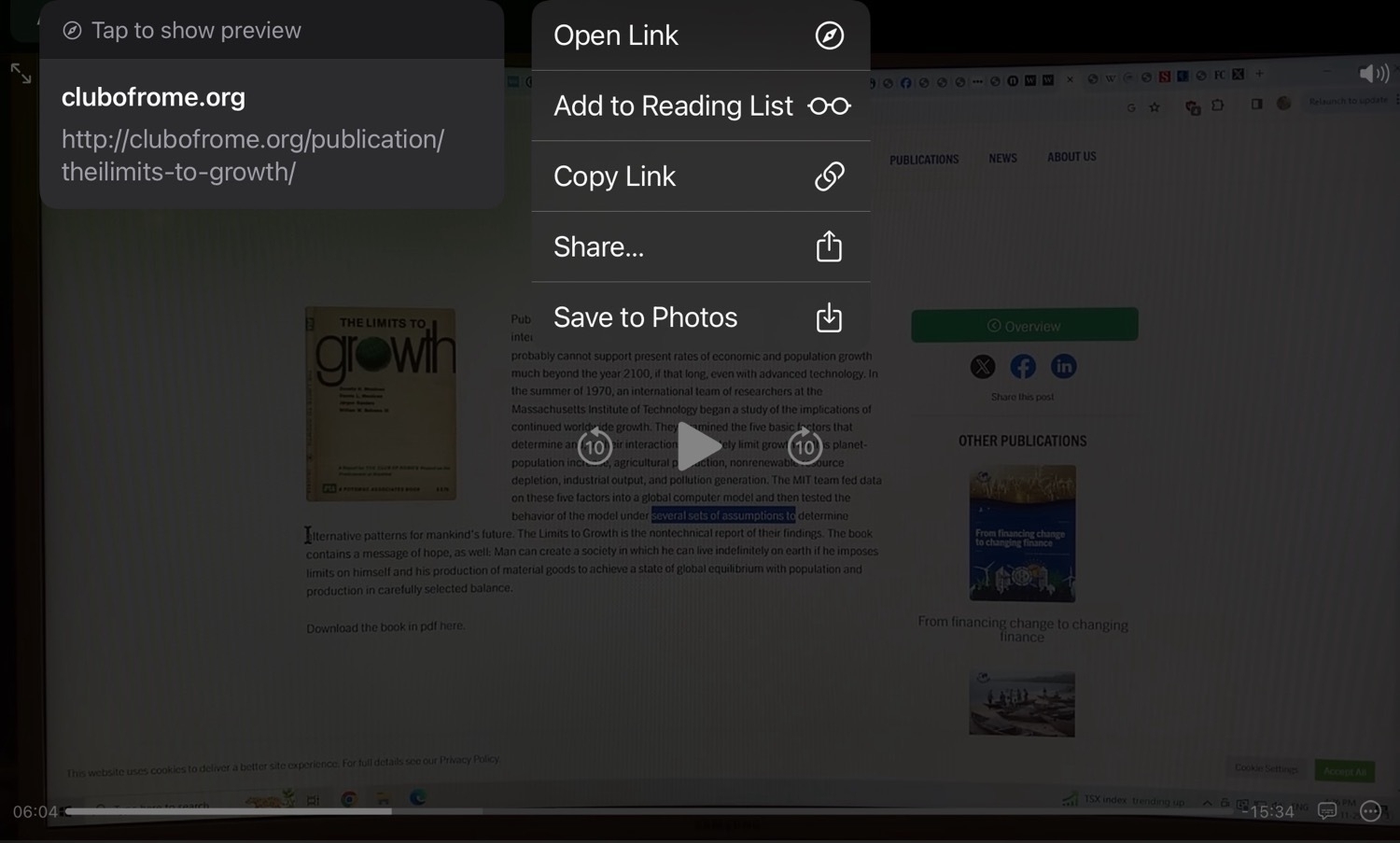

Here's an example that prompted me to start this post. Just moments ago I began watching a video from one of my regular YouTube channels, in which Paul Beckwith discusses a book synopsis. As he often does, he zooms in on his screen and spends time on browser pages of graphs and text, highlighting text as he discusses it. Not for the first time, I wanted to open the URLs he was discussing. With Apple's machine learning, I simply pause the video and tap or click the URL visible on screen in the video.

The URL field is pretty small, so a pencil is the best way to select it. If you don't have one, a trackpad or mouse cursor also works well for selecting. In either case, a pop-up menu appears offering to open the URL. This is all done by Apple's text recognition, which now works on any image or paused video.

Not all AI looks like a chatbot! This machine learning feature saves me a great deal of time, and I use it several times a week.

Another fun and useful example is the machine learning identification feature in Photos, which recognizes animals, insects, trees, flowers, food items, and more. When viewing an image, swipe up to open the info panel. If the app recognizes something, you'll see a couple of small stars on the circled "i" info button, or a tiny overlay indicating what kind of subject it found: a bug for insects, a leaf for plants. Tap the circle and you'll see a field to tap for more information. If it's a food item, you'll get suggested recipes.

Machine learning also powers language translation throughout the system. I use the excellent Mona app for browsing Mastodon, and its developer takes advantage of this feature.

Not only does it work in text areas, but I can also tap into an image, such as a screenshot, where foreign-language text appears, and a pop-up offers to translate that text as well.

Another recent example is the new set of features around scanned forms and PDF forms that lack form fields. As of the new OS releases in September 2023, Apple devices auto-detect form elements in such documents, allowing users to type in their own data: take a paper form, scan it, and type into the fields. Dictation (voice to text) is another example. Machine learning is everywhere in the OS, and the processing is largely on-device rather than cloud-based.

Ars Technica has an excellent, if somewhat dated, article from 2020. It's still an interesting read and underscores the point that Apple has been incorporating this technology into both its operating systems and its processors for years.

Of course, much more is coming, and in the not-too-distant future we'll begin to see more obvious features and improvements that people have begun asking for. Most notable, thanks to the visibility of ChatGPT, will be improvements to Siri and similar contextual interactions, whether by voice or text.

#Apple #iPad #MachineLearning