A check-in with Siri and Apple's Machine Learning

First, a recent bit of related news from Humane, a company founded by ex-Apple employees that has finally announced its first product. I bring this up at the start of a post about Siri because the purported aim of that product, the Ai Pin, is ambient computing powered by AI. It is a screenless device, informed by sensors and cameras, that the user interacts with primarily via voice or a touch-based projection. Humane is positioning the device as a solution for a world that spends too much time looking at screens; the Ai Pin is intended to free us from the screen. It's an interesting idea but, no.

My primary computer is an iPad, followed by an iPhone. These are supplemented with AirPods Pro, the Apple Watch and HomePods, and of course all of these devices have access to Apple's assistant, Siri. It's common among the tech and Apple press to ridicule Siri as stagnant technology left to wither on the vine: a voice assistant that's more likely to frustrate users than help them in useful ways. The general joke/meme is that Siri is only good for a couple of things and that it often gets even those few things wrong.

But that's not been my experience. In general, my experience using Siri has been positive, and I've long found it useful in my daily life. I do think there's some truth to the notion that Apple has been fairly conservative in pushing Siri forward. As is generally the case, Apple goes slowly and with careful consideration. I'm not suggesting Siri is perfect or finished. Of course not, and yes, there's more to be done.

All that said, I consider it a big win that I have an always-available, easy-to-access voice assistant that complements my visual and touch-based computing. I use Siri several times a day with very good results, and my satisfaction with Siri as a voice assistant is pretty high, at least in terms of successful responses. My primary methods of interaction are via the iPad or iPhone, sometimes with AirPods Pro, using a mix of Hey Siri and keyboard activation.

Here I'm offering just the most basic two-point evaluation:

- Successful responses based on current feature set: 9/10

- Innovation and addition of new features: 3/10

What I use on a regular or semi-regular basis with generally very good results:

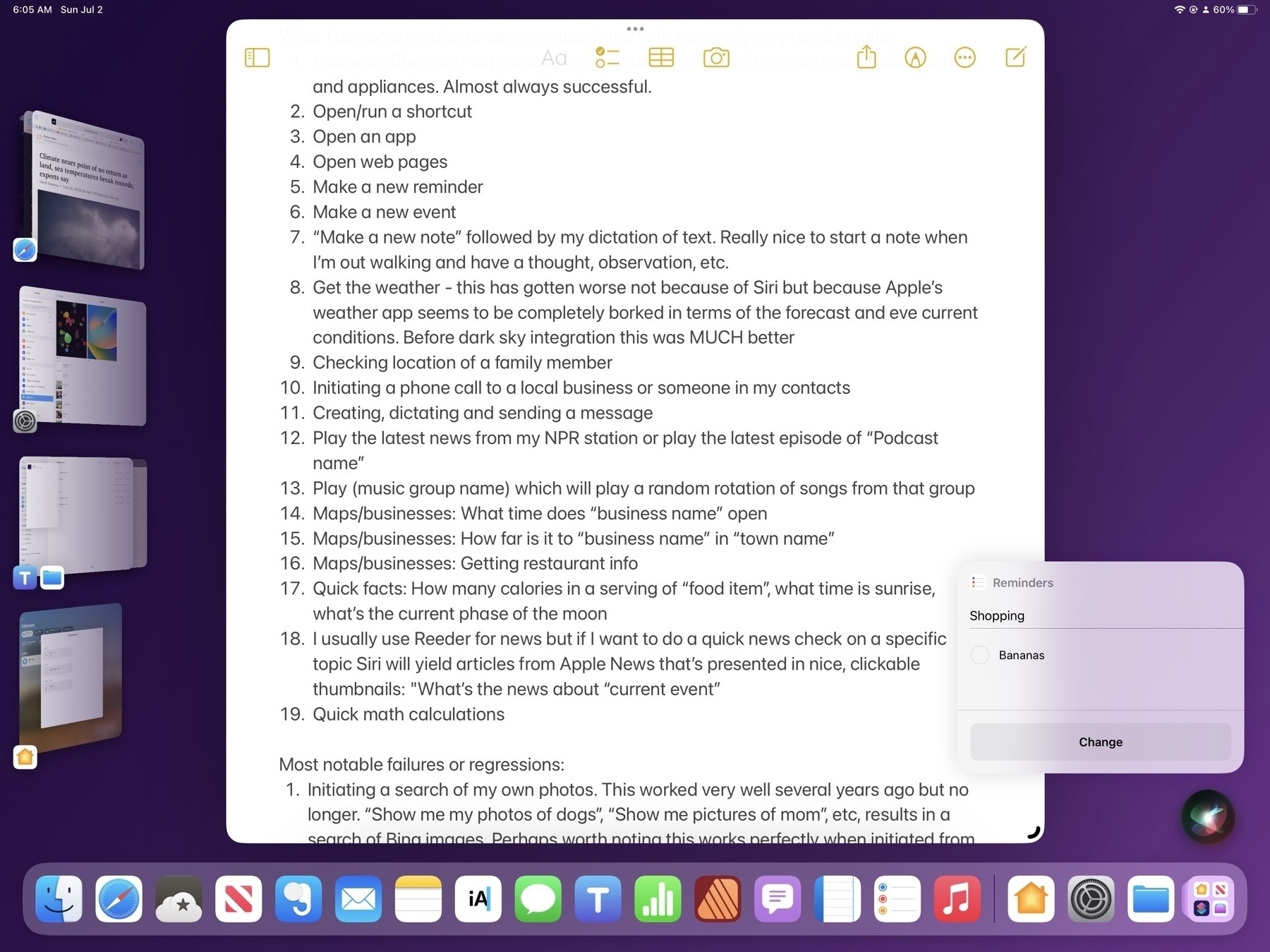

- HomeKit: Checking temperature of various locations with sensors, controlling lights and appliances. Almost always successful.

- Open/run a shortcut

- Open an app

- Open web pages

- Make a new reminder

- Make a new event

- “Make a new note” followed by my dictation of text. Really nice to start a note when I’m out walking and have a thought, observation, etc.

- Get the weather: this has gotten worse, not because of Siri but because Apple’s Weather app seems to be completely borked in terms of both the forecast and even current conditions. Before the Dark Sky integration this was MUCH better.

- Checking location of a family member

- Initiating a phone call to a local business or someone in my contacts

- Creating, dictating and sending a message

- Play the latest news from my NPR station or play the latest episode of “Podcast name”

- Play (music group name) which will play a random rotation of songs from that group

- Maps/businesses: What time does “business name” open

- Maps/businesses: How far is it to “business name” in “town name”

- Maps/businesses: Getting restaurant info

- Quick facts: How many calories in a serving of “food item”, what time is sunrise, what’s the current phase of the moon

- I usually use Reeder for news, but if I want a quick news check on a specific topic, Siri will bring up articles from Apple News presented as nice, clickable thumbnails: "What’s the news about “current event”?"

- Quick math calculations

Most notable failures or regressions that I've found in my use:

- Initiating a search of my own photos. This worked very well several years ago but no longer does. “Show me my photos of dogs”, “Show me pictures of mom”, etc., result in a search of Bing images. It's perhaps worth noting that this works perfectly when initiated from a Spotlight search but fails when initiated from Siri.

- Multiple follow-ups and conversational interactions (not really a feature in the first place). This is a specific feature noted for the upcoming release of iOS 17.

- Asking Siri for recent emails or messages from a contact yields inconsistent results and a cumbersome, difficult interface. If I ask Siri to show me recent emails from a contact, it usually shows only one email, but sometimes it will show more and read the first email's subject line, asking if I want more read. If I say no, the list disappears. There's no way to interact with the found emails.

In the tech sphere, the news has been dominated by "AI" for the past 10 months: most recently ChatGPT and the integration of large language models into web search, with Microsoft's Copilot and Google's Bard being the most prominent examples. Pundits have been wondering how Apple would respond. When would it put more effort into Siri, and would that include AI of some sort? Or would the voice assistant be left to fall further behind? The not-too-surprising answer seems to be that Apple will continue to improve Siri gradually, on its own terms, without being pressured by the efforts and products of others or by the calls from tech pundits.

> "We view AI as huge, and we will continue weaving it into our products on a very thoughtful basis." - Tim Cook

Siri the voice assistant is just one part of the larger machine learning foundation that powers features like dictation, text recognition, object identification and subject isolation in images, autocorrect, Spotlight and much more. While Siri is a focal point of interaction, the underlying machine learning is not meant to be the focus. It's not the tool but rather the background context that informs and assists the user through features found in the OS and apps. Machine learning isn't as flashy as ChatGPT and Bard, but unlike those services it reliably improves my user experience in meaningful ways every day.

For those who haven't used Siri recently, Apple provides a few pages with examples of the current feature set: Apple's Siri for iPad help page and Apple's main Siri page.