Siri and the iOS Mesh

Over the past couple of years it’s become a thing, in the nerd community, to complain incessantly about how inadequate Siri is. To which I incessantly roll my eyes. I’ve written many times about Siri, and it’s mostly been positive because my experience has been mostly positive. Siri’s not perfect, but in my experience it’s usually pretty great. A month ago HomePod came into my house, and I’ve been integrating it into my daily flow. I’d actually started a “Month with HomePod” sort of post but decided to fold it into this one because something shifted in my thinking over the past day, and it has to do with Siri and iOS as an ecosystem.

It began with Jim Dalrymple’s post over at The Loop: Siri and our expectations. I like the way he’s discussing Siri here. Rather than just complain, as so many do, he breaks it down by device: what he expects from Siri on each one, and how useful it turns out to be. To summarize, he’s happy with Siri on HomePod and CarPlay but not on iPhone or Watch. His expectations for the phone and watch are higher, and they aren’t met, which leads him to conclude: “It seems like such a waste, but I can’t figure out a way to make it work better.”

As I read through the comments I came to one by Richard in which he states, in part:

"I’ve improved my interactions with Siri on both my iPhone 8 and iPad Pro by simply avoiding “hey Siri” and instead, holding down the home button to activate it. Not sure how that’s done on an iPhone X but no doubt there’s a way....Since getting the HomePod I’ve reserved “Hey Siri” for that device and the watch. My iPads and iPhone are now activated via button and yes, it seems better because it’s more controlled, more deliberate and usually in the context of my iPad workflow. In particular I like the feel of activating Siri with the iPad and the Brydge keyboard as it has a dedicated Siri key on the bottom left of the keyboard. The interesting thing about this keyboard access to Siri is that it feels more instantaneous. A lot of folks gave up on Siri when it really sucked in the beginning and like you, I narrowed my use to timers and such. But lately I’m expanding my use now that I’ve mostly dumped “hey Siri” and am getting much better results. Obviously “hey Siri” is essential with CarPlay but it works well there for some odd reason."

Siri is also much faster at getting certain tasks done on my screen than tapping or typing could ever be. One example: searching my own images. With a tap and a voice command I’ve got images presented in Photos matching whatever search criteria I’ve given. Images of my dad from 2018? Done. Pictures of dogs from last month? Done. It’s much faster than opening the Photos app and then tapping into a search. Want to find YouTube videos of Stephen Colbert? I could open a browser window and start a search, which will load results in Bing, or type in YouTube, wait for that page to load, type in Stephen Colbert, hit return and wait again. Or I can activate Siri and say “Search YouTube for Stephen Colbert,” which loads much faster than a web page, and then tap the link in the bottom right corner to be taken to YouTube for the results.

One thing I find myself wishing for on the big screen of the iPad is that the activated Siri screen take up just a portion of the screen rather than completely taking over the iPad. Maybe a Slide Over? I’d like to be able to make a request of Siri and keep working rather than wait. Along those lines, I’d like Siri to be treated like an app, letting me go back through my Siri request history. The point here is that Siri isn’t just a digital assistant but is, in fact, an application. Give it a persistent form with its own window that I can keep around and I think Siri would be even more useful. Add to that the ability to drag and drop (which would come with its status as an app) and it’s even better.

Which brings me to voice and visual computing. Specifically, the idea of voice-first computing as it relates to Siri, HomePod and others such as Alexa, Google, etc. After a month with HomePod (and months with AirPods) I can safely say that while voice computing is a nice supplement to visual computing in certain circumstances, I don’t see it being much more than that for me anytime soon, if ever. As someone with decent eyesight who makes a living using screens, I will likely continue spending most of my days with a screen in front of me. Even HomePod, designed to be voice-first, is not going to be that for me.

I recently posted that with HomePod as a music player I was having issues choosing music. With an Apple Music subscription there is so much, and I’m terrible at remembering artist names and even worse at album names. It works great to just ask for music, a genre or a recent playlist. That covers about 30% of my music playing. But I often want to browse, and the only way to do that is visually. So, from the iPad or iPhone, I’m usually using the Music app for streaming or the Remote app for accessing the music in my iTunes library on my Mac mini. I do use voice for some playback control and make the usual requests to control HomeKit stuff. But I’m using AirPlay far more than I expected.

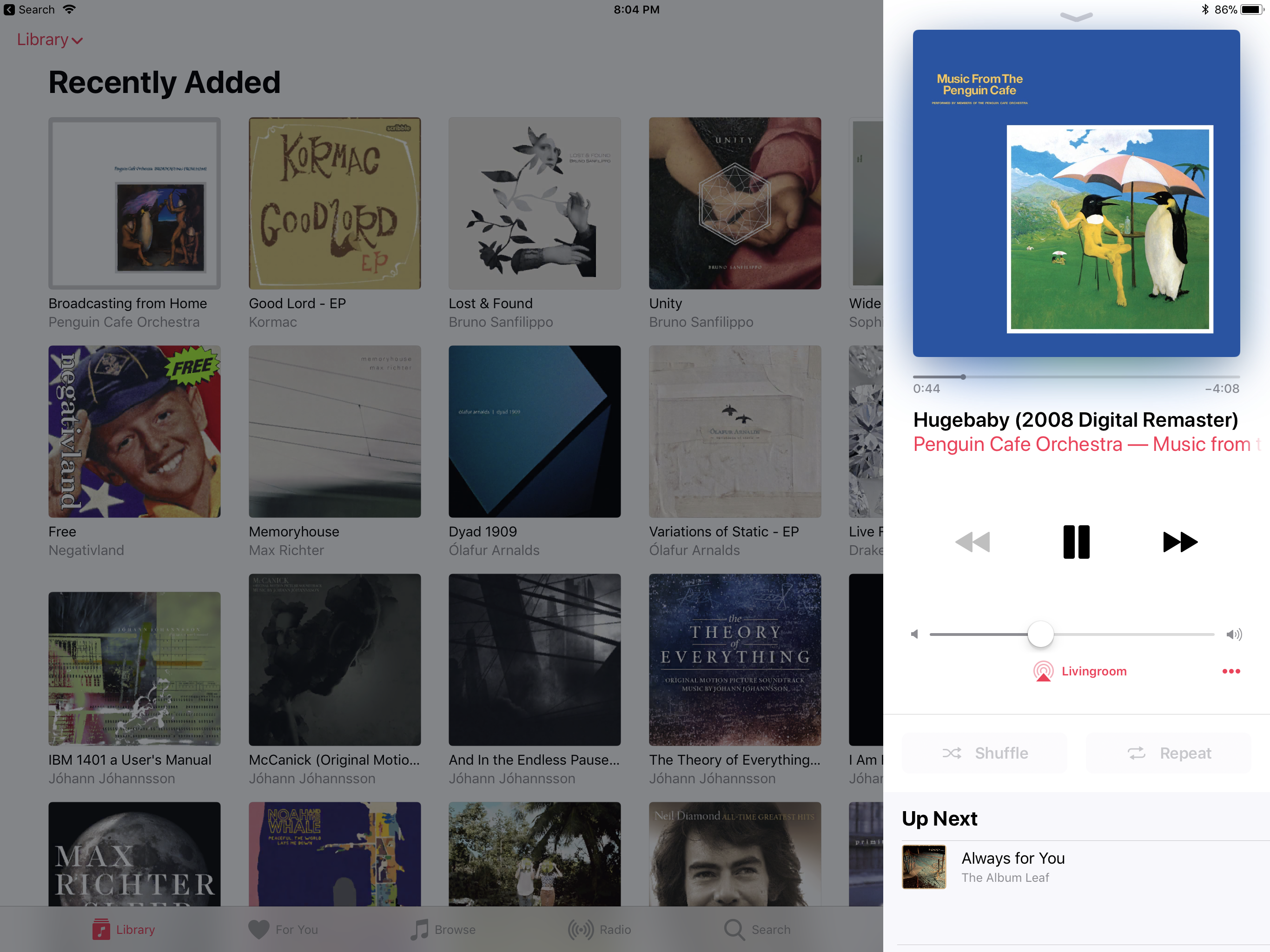

Using the Music app and Control Center from iPad or iPhone is yet another way to control playback.

Apple has made efforts to connect our devices together with things such as AirDrop and Handoff. I can answer a call on my watch or iPad. At this point everything almost always stays in sync. Moving from one device to another is almost frictionless. What I realize now is just how well this ecosystem works when I embrace it as an interconnected system of companions that form a whole. It works as a mesh which, thanks to HomeKit, also includes lights, a heater and a coffee maker, with more devices to come. An example of this mesh: I came in from a walk 10 minutes ago while streaming Apple Music on my phone and listening via AirPods. When I came inside I tapped the AirPlay icon to switch the audio output to HomePod. But I’m working on my iPad and can control the phone’s playback via Apple Music or Control Center on the iPad or, if I prefer, I can speak to the air to control that playback. A nice convenience, because I left the phone on the shelf by the door whereas the iPad is on my lap.

At any given moment, within this ecosystem, all of my devices are interconnected. They are not one device, but they function as one. They allow me to interact, visually or by voice, with different iOS devices in my lap or across the room, as well as with non-computer devices in HomeKit. That means I can turn a light off across the room or, if I’m staying late after dinner at a friend’s house, turn on a light for my dogs from across town.

So, for the nerds who insist that having multiple timers is very important, I’m glad they have Alexa for that. I’m truly happy that they are getting what they need from Google Assistant. As for myself, well, I’ll just be over here suffering through all the limitations of Siri and iOS.